Accelerating Returns

Up To Speed With The Manic Pace Of AI Development

Alive

Conversations About Life When Technology Becomes Sentient

Post #15 - Accelerating Returns

Previous post: The Dumb Race To Intelligence Supremacy

The Law Of Accelerating Returns

Often, when I describe the short-term future of tech, the response I get is that those sci-fi-like ideas are 20, 30, or even 50 years away. They are not. Most of the predictions I make in this book will happen in the next five years, and some even within this year or the next. This timeline might feel alien to most, but it's a perfectly normal perspective for a geek—and there's a good reason for that.

One of the key mindset differences between those who work in tech and those in more traditional industries, like construction or manufacturing, is the perception of pace. What counts as fast in one world is glacial in the other. To understand what "tech-fast" truly means, it helps to understand the principle that governs its development: the law of accelerating returns.

Throughout modern history, each technological breakthrough has done more than just advance its own field. It has also accelerated our ability to create the next iteration of technology, causing the rate of improvement itself to grow exponentially.

This exponential pace of progress is often visualized on the famous technology acceleration curve which states that …

The rate of technological progress—not just the technology itself—tends to accelerate exponentially over time.

In other words,

Technology doesn’t just get better.

Our ability to make it better also improves.

For a geek, that’s easy to understand from experience. When I started coding, the work itself was a grueling task. Take RPG—a language used to program IBM’s midrange computers known as the AS/400. It demanded that you fill an endlessly long, column-based table with digits. It felt like adjusting dip switches on an ancient electrical device: this column is set to 4, that one to 11, and so on. As the machine read through the settings, it performed the exact task of every line, and the sequence of those operations ran the code.

It was interesting for a geek, because we like those kinds of challenges, but humiliating when you got one of those countless digits wrong. All the machine told you was: “did not compile.” You then had to hunt through the tens of thousands of numbers you had painstakingly entered for hours to find the single incorrect one.

This is why an old geek like me can clearly feel how much easier coding has become over the years. As our computers became more powerful, we handed over more of the coding task to them. This started with concepts like object-oriented programming, where chunks of code with specific functions could be reused instead of being built from scratch, and has evolved all the way to asking an AI to write, debug, or improve code for you with a simple prompt. As a result, the tedious parts faded away, our ability to create improved, and our code improved along with it. I can feel the change and its pace as a geek because I lived it. How about those who haven’t? Let me use an example that we all shared.

To give you (not a geek, I assume) a visceral sense of what this pace of change feels like, let me borrow from a tough memory we were all glad to leave behind: the COVID-19 pandemic. The rate of the virus's spread, like the rate of improvement of technology, wasn’t fixed; it accelerated as the number of infections grew.

Imagine a virus that spreads exponentially—each person who gets it doesn’t pass it to just one other person, but to two. At first, it seems slow. One case becomes two, then four, then eight. For days, maybe weeks, it feels manageable. And then, suddenly, it explodes. Hospitals overflow. Cities lock down. Everyone is affected.

Technological progress works in much the same way. At first, the changes seem small—a faster processor here, a new gadget there. But each advancement builds on the last, just as each new infection multiplies the virus's reach. Incrementally, often unnoticed, the gains compound until, seemingly overnight, the world has changed beyond recognition. What once felt like slow, steady evolution suddenly arrives like a lightning strike. One day you’re on a monochrome screen running DOS; the next you’re holding a tablet that recognizes your handwriting and responds to your spoken words.

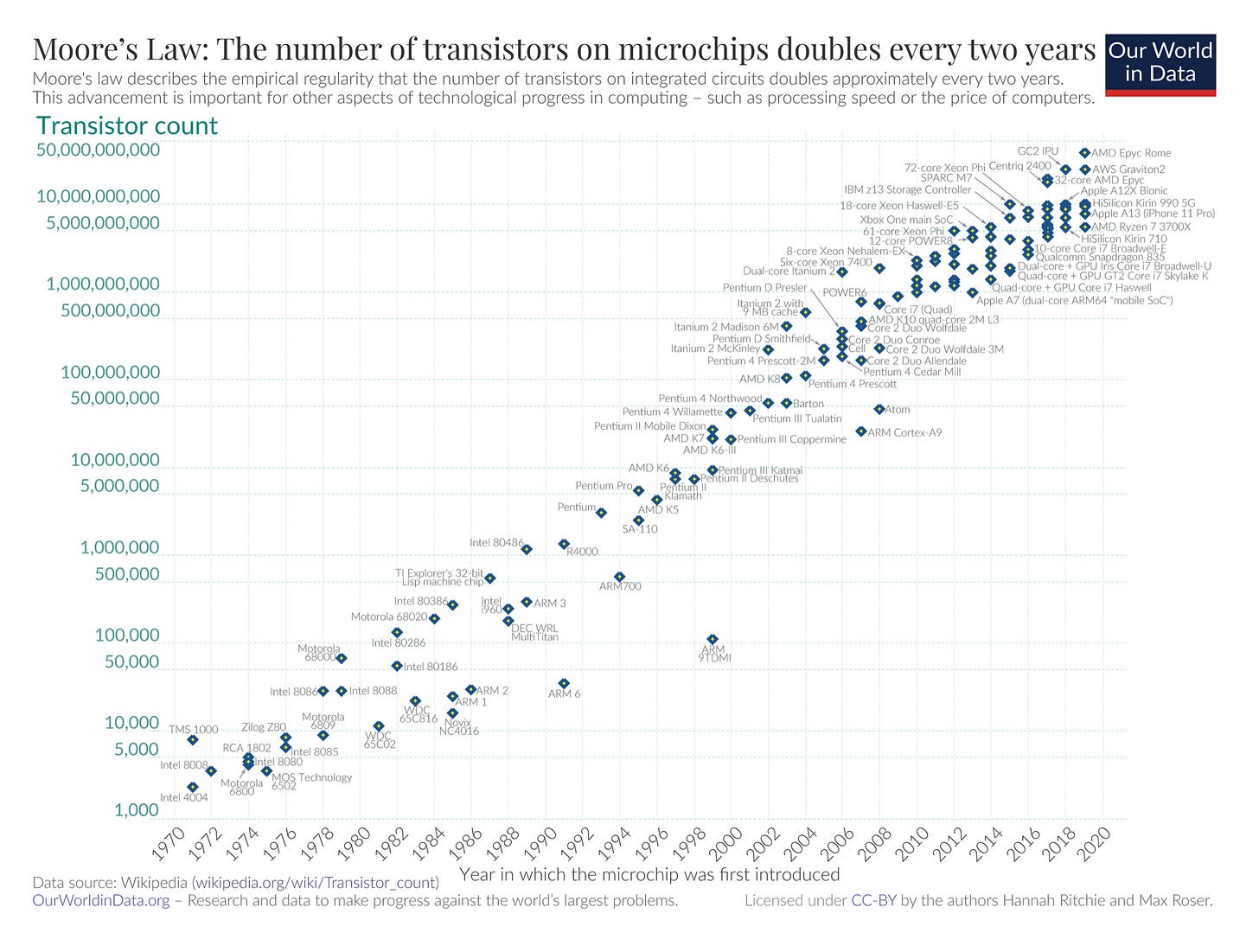

The chart above tracks the exponential growth in the number of transistors on a microprocessor. This trend is famously known as Moore’s Law, which observes that this number doubles roughly every two years. This relentless increase in transistor density has been the primary engine driving a corresponding exponential growth in processing power.

Moore’s Law has defined the pace for processing power, while similar exponential trends have emerged in almost every other aspect of tech—storage, memory, network speeds, and so on.

Since the days of vacuum tubes and room-size computers, this has been the rhythm that those in tech needed to get comfortable with.

Here’s another chart from Wikipedia that puts the numbers in perspective.

Progress on a logarithmic chart like this appears linear, but that straight line is deceiving. If you read the y-axis, you’ll notice that the number of transistors jumps from 1,000 to 10,000 to 100,000 and so on. Over the last 50 years, the number of transistors on a chip has multiplied more than 20 million times, from a few thousand to over 50 billion. This is the staggering difference that exponential growth makes over time.

What’s even more interesting is the result of the next doubling as this trend continues. The next doubling doesn’t just add another 2,300 transistors (which would be the case if the first doubling of the Intel 4004’s original transistor count had simply repeated as linear growth). It adds as much power as has been achieved in all of history up to that point: another 50 billion transistors, then another 100 billion on the next doubling and another 200 billion on the one after. This means the equivalent of all prior progress is being added again and again, every couple of years.
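For readers who like to see the arithmetic, here is a minimal Python sketch of that idea. It assumes a starting count of 2,300 transistors (the Intel 4004 figure mentioned above); the function names are made up for illustration. It shows that every doubling adds exactly as much as everything that existed before it, while linear growth keeps adding the same 2,300.

```python
# Illustrative sketch: compare doubling with linear growth,
# starting from the 2,300 transistors quoted for the Intel 4004.
INTEL_4004_TRANSISTORS = 2_300


def doubling_steps(start: int, doublings: int) -> list[tuple[int, int, int]]:
    """Return (step, added_in_step, total_after_step) for each doubling."""
    steps = []
    total = start
    for step in range(1, doublings + 1):
        added = total  # a doubling adds exactly what already existed
        total += added
        steps.append((step, added, total))
    return steps


def linear_total(start: int, steps: int) -> int:
    """Total if every step added only the original amount (linear growth)."""
    return start + start * steps


if __name__ == "__main__":
    for step, added, total in doubling_steps(INTEL_4004_TRANSISTORS, 10):
        linear = linear_total(INTEL_4004_TRANSISTORS, step)
        print(f"step {step:2d}: doubling adds {added:>9,} -> {total:>9,}"
              f"   (linear would be {linear:,})")
```

After only ten steps the two columns are already far apart, and the gap itself keeps doubling.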

Hard to imagine, isn’t it? This is why …

The human mind is able to grasp that technology is improving.

What we often fail to grasp is the exponential curve when the rate of improvement is also accelerating.

Let me share a quick example to help you understand the enormity of this concept. Legend has it that when chess was invented, a proud king offered the inventor any reward he wanted. The inventor’s request seemed humble: place one grain of rice on the first square of a chessboard, two on the second, four on the third, and continue doubling the amount for every subsequent square.

The king, unfamiliar with exponential growth, readily agreed, thinking the inventor was a fool. But was he? Let’s do the math to see who was right.

The first few squares are manageable: 1, 2, 4, 8, 16... But what about the final, 64th square? After 63 doublings, that single square would require over 9.2 quintillion grains of rice (2 to the power of 63).

The total amount for the entire board is even more staggering: roughly 18.4 quintillion grains. That pile of rice would be larger than Mount Everest and is more than all the rice ever harvested in human history.
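If you want to check the king's bill yourself, a few lines of Python are enough. This is only a sketch of the arithmetic in the legend; the function names are hypothetical.

```python
# Sketch of the chessboard legend: one grain on square 1, doubling each square.
SQUARES = 64


def grains_on_square(n: int) -> int:
    """Grains on square n (1-based): square 1 holds 1, square 2 holds 2, ..."""
    return 2 ** (n - 1)


def total_grains(squares: int = SQUARES) -> int:
    """Grains on the whole board, summed square by square."""
    return sum(grains_on_square(n) for n in range(1, squares + 1))


last_square = grains_on_square(SQUARES)
whole_board = total_grains()

print(f"64th square: {last_square:,}")   # 9,223,372,036,854,775,808
print(f"whole board: {whole_board:,}")   # 18,446,744,073,709,551,615
```

Notice that the whole board is just one grain short of twice the last square: the final doubling alone is worth more than the previous 63 squares combined.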

Now, think about that in terms of the microprocessor. The first commercial microprocessor, the Intel 4004, was released in 1971. That’s about 54 years ago. Divide that by a doubling time of two years, as per Moore’s Law, and you get 27 doublings. So what does that mean? If we started with one unit of performance in 1971, after 27 doublings, the theoretical performance should be roughly 134 million times greater. 😳
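The same back-of-the-envelope calculation can be written as a tiny Python helper. It assumes a clean two-year doubling period, which is a simplification of Moore's Law rather than a precise model, and the function name is hypothetical.

```python
# Sketch: theoretical growth multiplier under a fixed doubling period.
def moore_multiplier(years: float, doubling_years: float = 2.0) -> float:
    """How many times larger something gets if it doubles every doubling_years."""
    doublings = years / doubling_years
    return 2 ** doublings


# 54 years since the Intel 4004 (1971), doubling every two years -> 27 doublings
print(f"{moore_multiplier(54):,.0f}")  # 134,217,728
```

Changing the doubling period by even a few months moves the result by orders of magnitude, which is why estimates of "how much faster" vary so widely.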

How does this math stack up against reality? It is strikingly accurate.

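The Intel 4004 could perform 66 thousand instructions per second. The Apple M2 Ultra (of 2023, so one doubling ago) could do 600 billion. By these figures, that is roughly a 9 million fold increase, within an order of magnitude of the 67 million that 26 doublings would predict. The numbers broadly check out.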

But wait, there’s more. NVIDIA’s H100, the chip powering today's AI systems, can perform nearly 4 quadrillion floating-point operations per second (FLOPS) in certain configurations. That's in the range of 40 to 60 billion times the performance of the first microprocessor. This is one of the key reasons why AI is now capable of doing so much more than we ever thought possible in our lifetime.

I’m not done yet. There’s even more. Today’s top-end supercomputers can reach exaflop speeds—a quintillion (10^18) operations per second. Compared to the original Intel 4004, that’s a potential performance increase of more than 10 trillion-fold. The millions-fold increase we saw in consumer chips, which likely sounded shocking, seems almost conservative by comparison.
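Here is a rough sketch of those comparisons in Python, using only the figures quoted in this post. Treat it as an order-of-magnitude exercise: it divides instructions per second by floating-point operations per second as if they were the same unit, which they are not, and the names in the code are hypothetical.

```python
# Rough speed-up ratios relative to the Intel 4004, using the figures
# quoted in this post. Units are mixed (instructions/s vs FLOPS), so the
# results are order-of-magnitude estimates only.
INTEL_4004_OPS = 66_000  # instructions per second, as quoted above

QUOTED_OPS_PER_SECOND = {
    "Apple M2 Ultra": 600e9,          # 600 billion
    "NVIDIA H100": 4e15,              # ~4 quadrillion FLOPS, certain configs
    "Exascale supercomputer": 1e18,   # one exaflop
}


def speedup(ops_per_second: float, baseline: float = INTEL_4004_OPS) -> float:
    """How many times faster than the baseline chip."""
    return ops_per_second / baseline


for name, ops in QUOTED_OPS_PER_SECOND.items():
    print(f"{name}: about {speedup(ops):,.0f} times the Intel 4004")
```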

Compared to the computers I first learned to program on,

today’s machines belong to a computer-science fantasy realm—so advanced they almost defy belief.

All in the lifetime of one human. Staggering!

That’s what a steady doubling every two years, sprinkled with a few breakthrough innovations, leads to. And AI’s progress, the topic of this book, is going even faster.

Accelerating Intelligence

Mo: Trixie, how does AI progress compare to Moore’s Law?

Trixie: AI progress is accelerating beyond Moore’s Law, with different estimates on its doubling time. OpenAI’s research shows that AI compute has been doubling every 3.4 months since 2012, driven by increasing model sizes and more efficient training. Research from Epoch suggests that algorithmic efficiency alone contributes a doubling equivalent every 9 months, meaning AI isn’t just getting bigger; it’s also getting smarter. Putting it together, Satya Nadella, CEO of Microsoft, claimed that AI capabilities double roughly every 6 months, reflecting improvements in both hardware and algorithms. Time magazine predicts that this rate of progress is unlikely to slow down any time soon.
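To put those doubling times side by side, here is a small Python sketch. It takes the doubling periods exactly as quoted above and assumes, purely for illustration, that each stays constant over a two-year window; the names in the code are hypothetical.

```python
# Sketch: growth over the same two-year window for each doubling time
# quoted above. Assumes each doubling period stays constant, which is
# an illustration rather than a forecast.
WINDOW_MONTHS = 24

DOUBLING_MONTHS = {
    "Moore's Law": 24,
    "Algorithmic efficiency (Epoch)": 9,
    "AI capabilities (Nadella)": 6,
    "AI compute (OpenAI)": 3.4,
}


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplier after `months` if the quantity doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


for name, period in DOUBLING_MONTHS.items():
    factor = growth_factor(WINDOW_MONTHS, period)
    print(f"{name}: x{factor:,.1f} in two years")
```

In the time Moore's Law delivers a single doubling, a six-month doubling period delivers a sixteen-fold gain, and a 3.4-month period delivers well over a hundred-fold.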
